Frequently asked questions

General questions

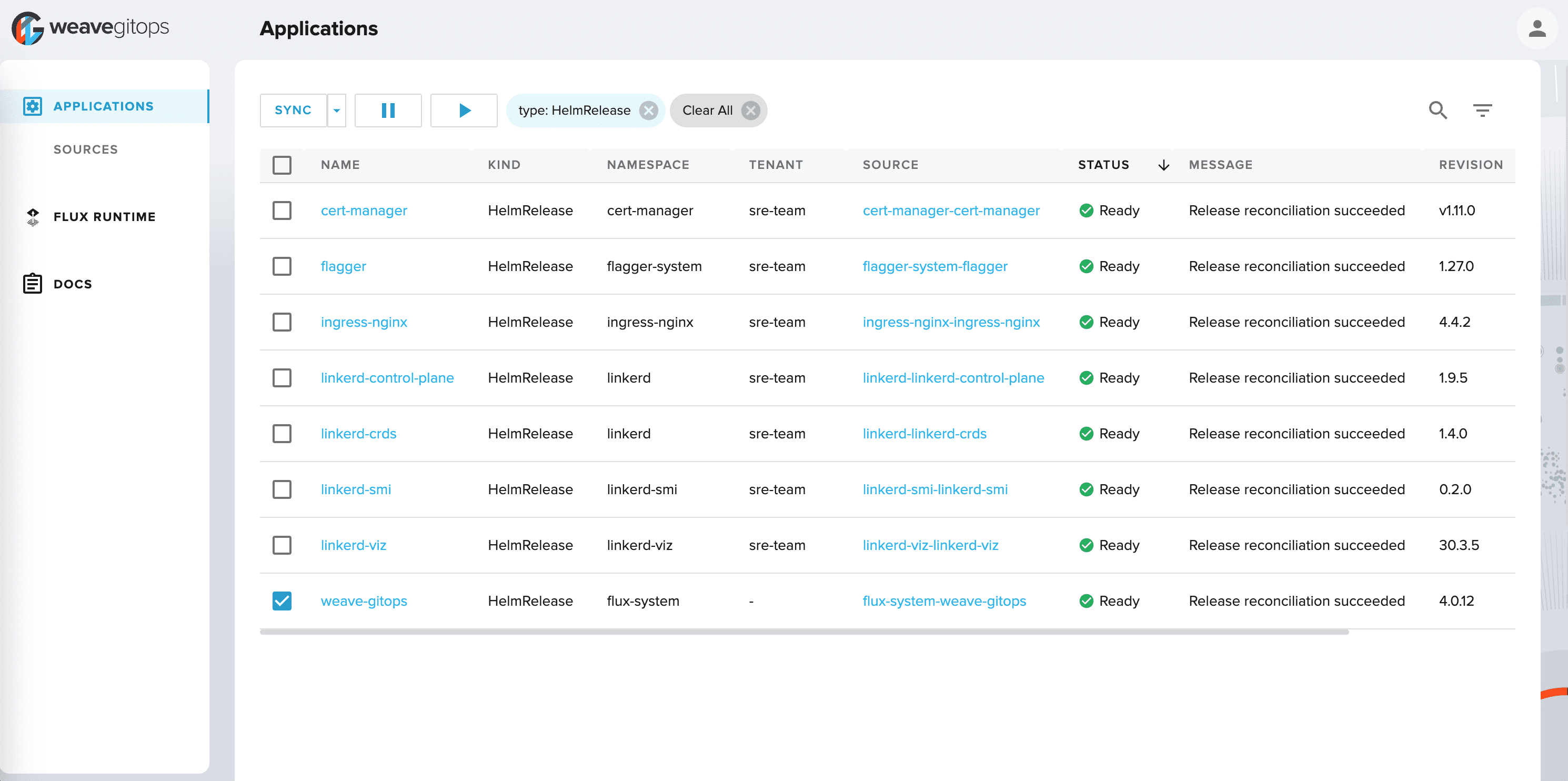

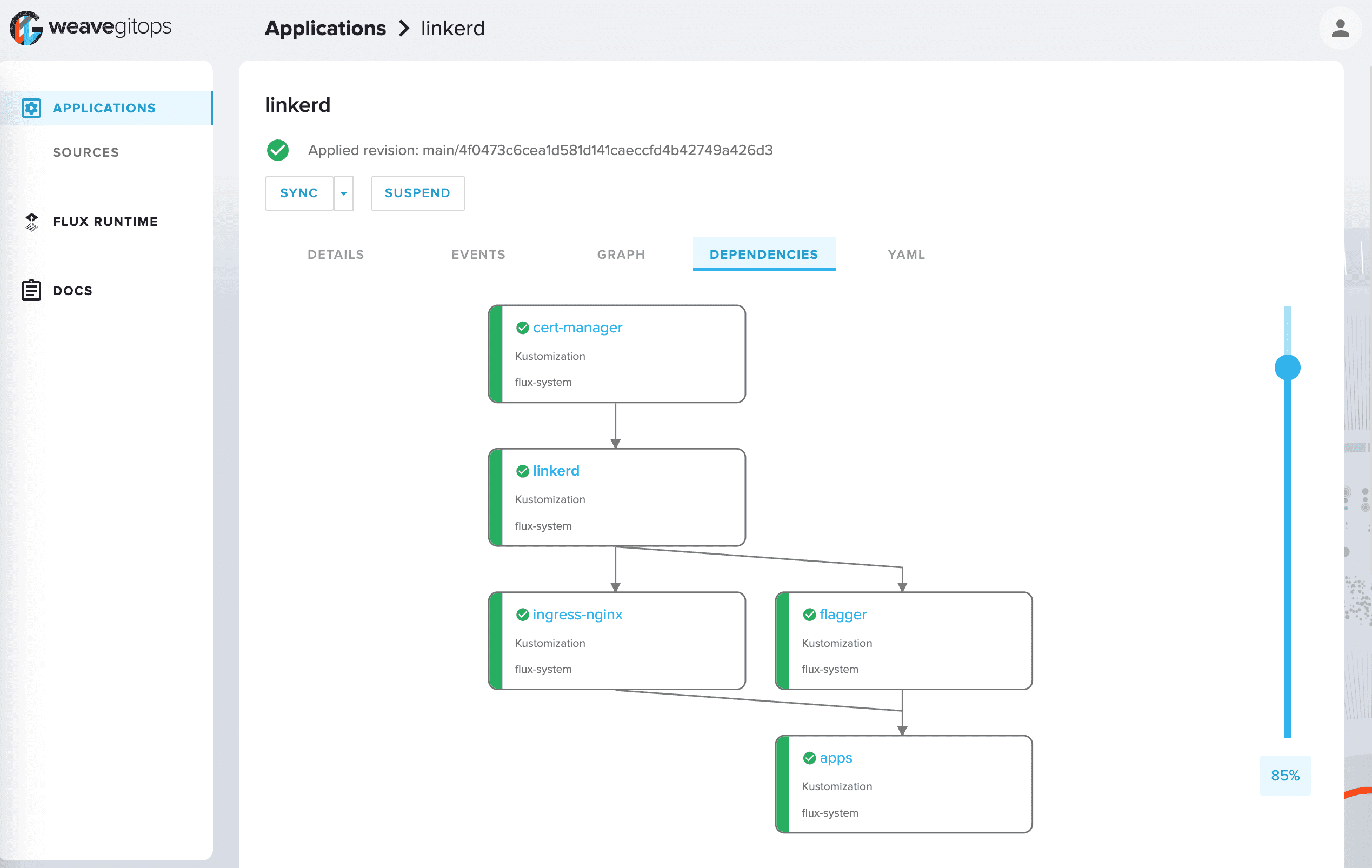

Does Flux have a UI / GUI?

The Flux project does not provide a UI of its own, but there are a variety of UIs for Flux in the Flux Ecosystem.

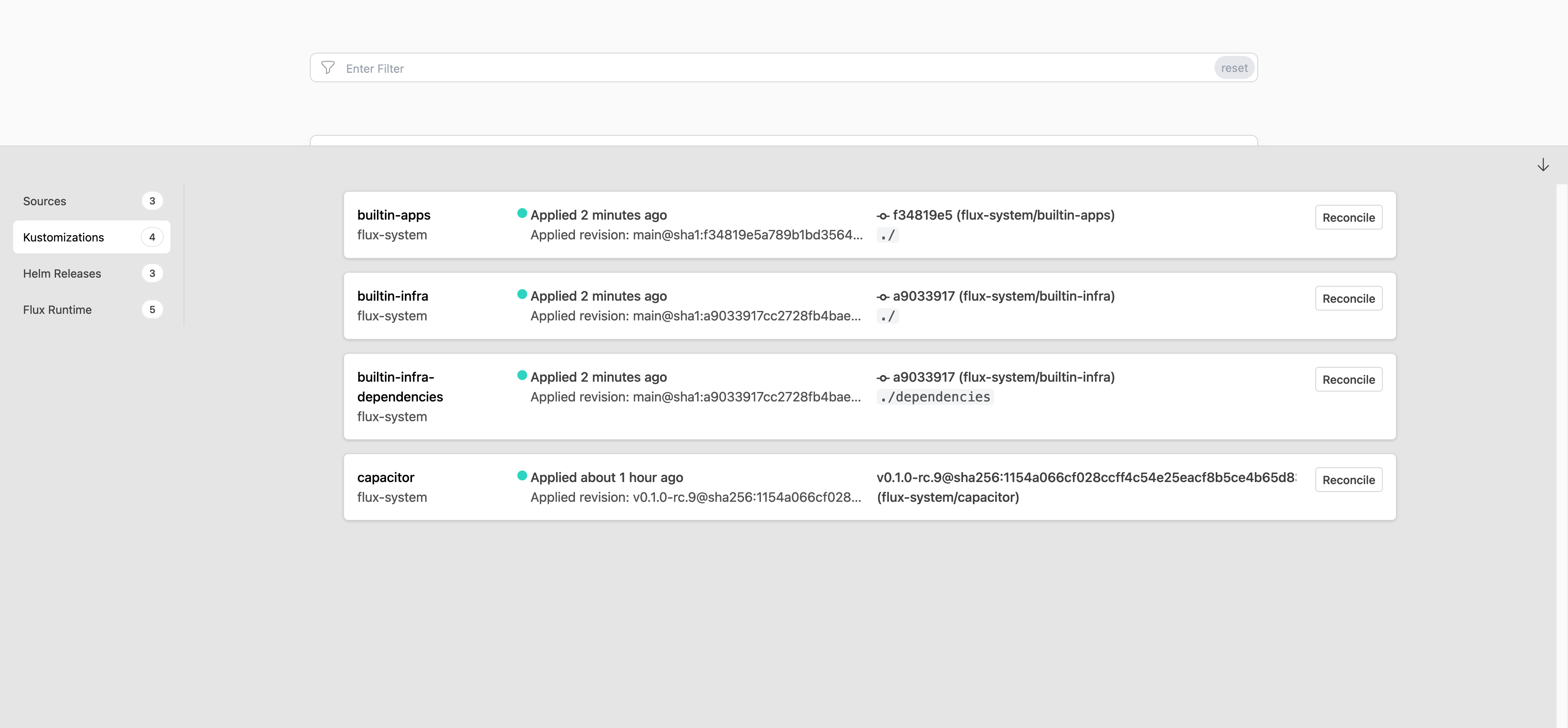

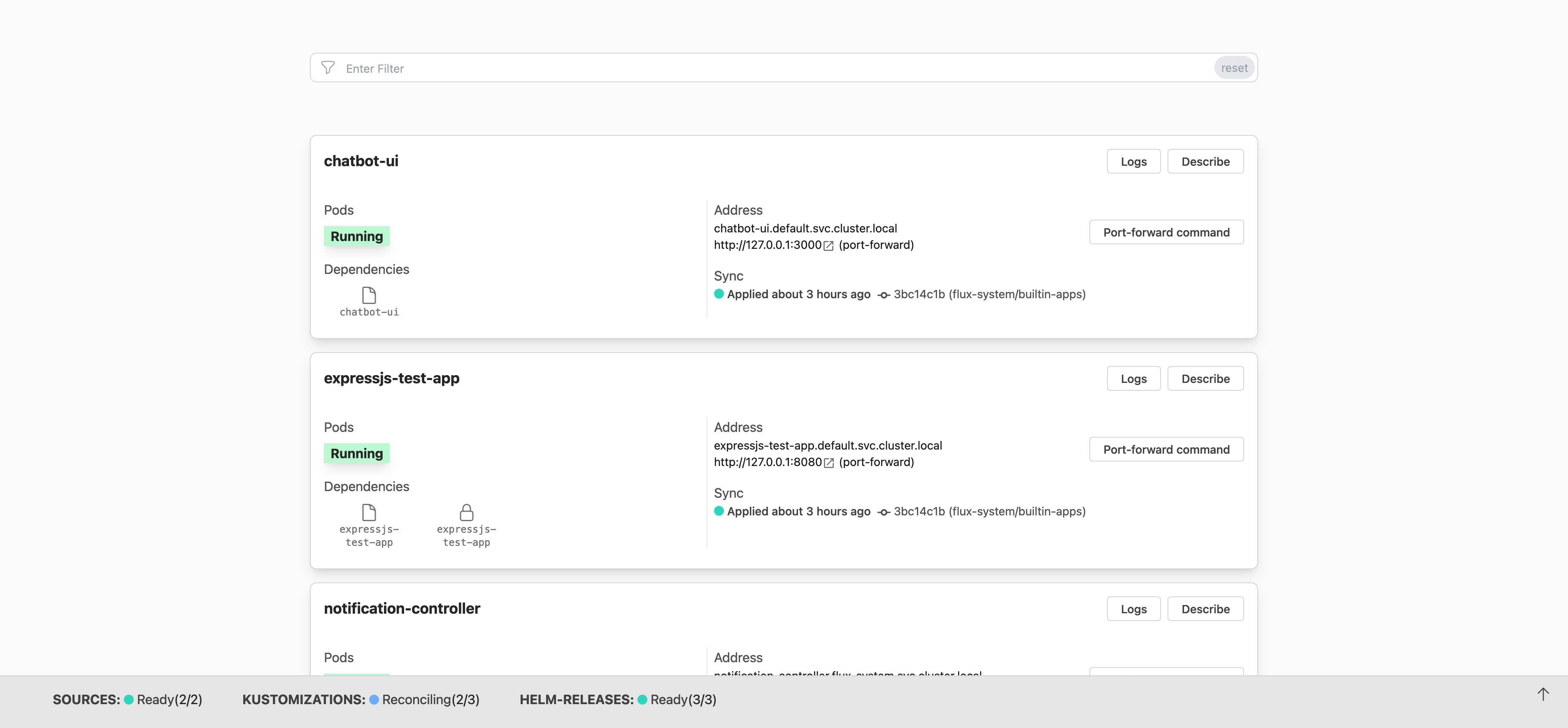

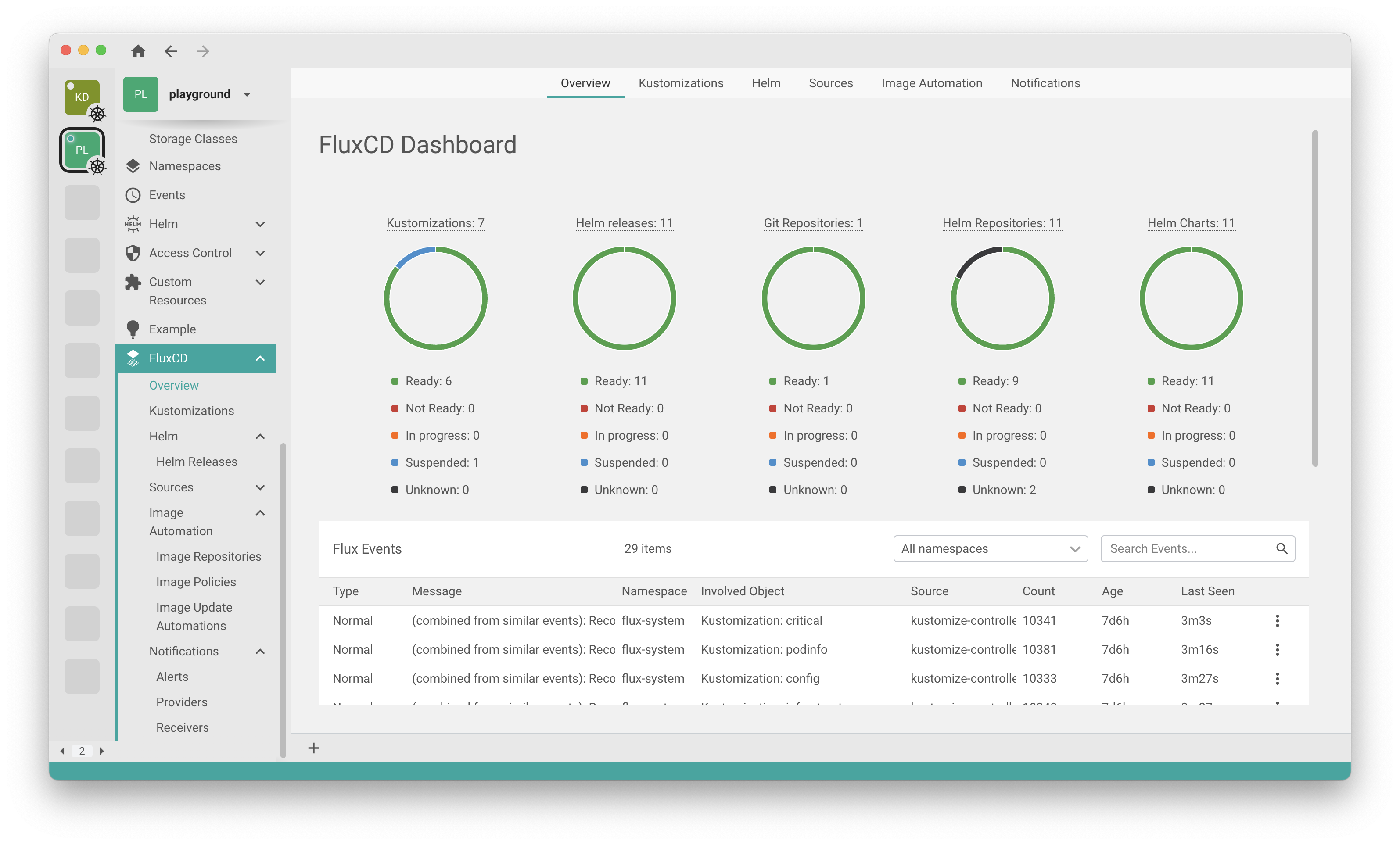

Capacitor

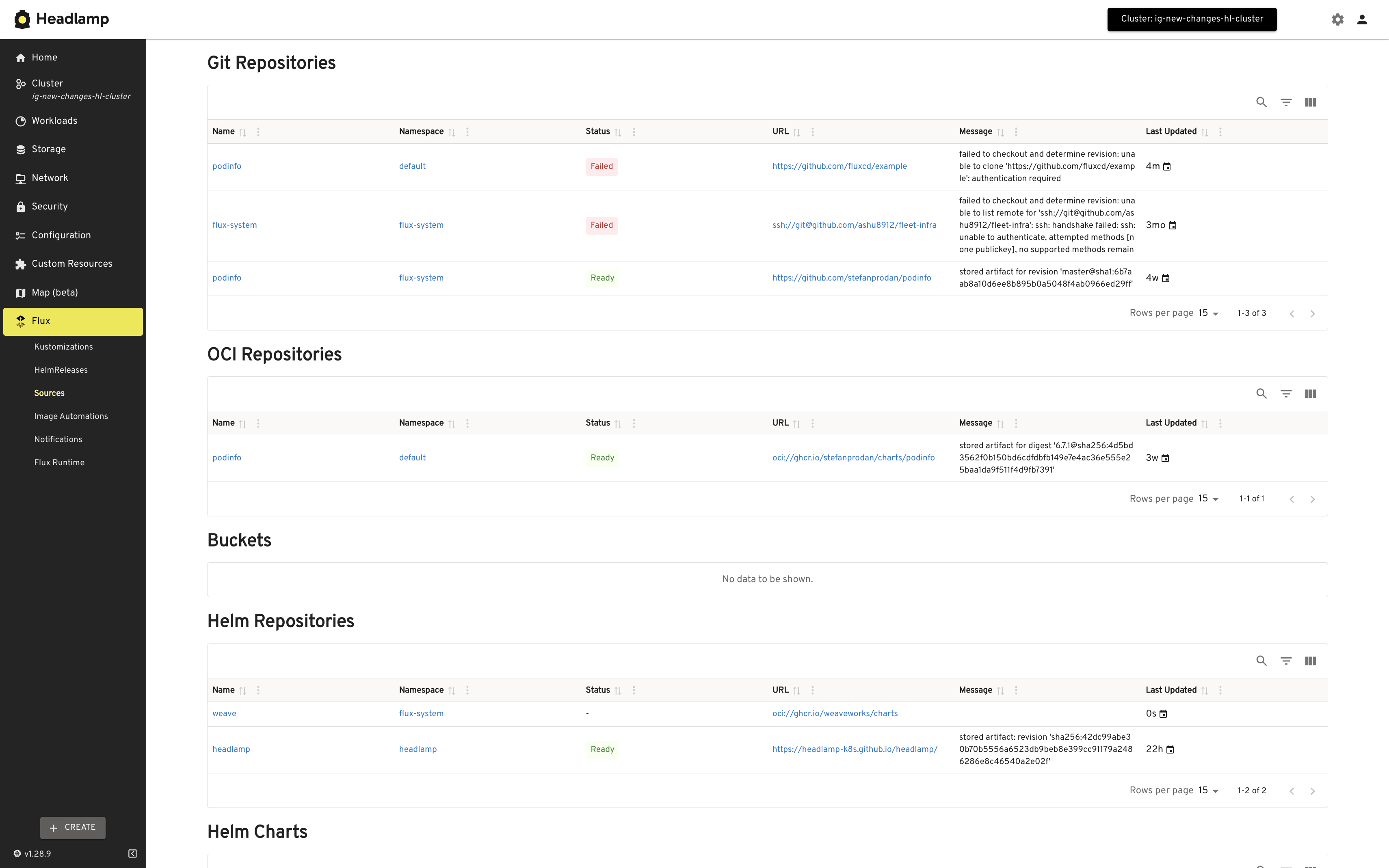

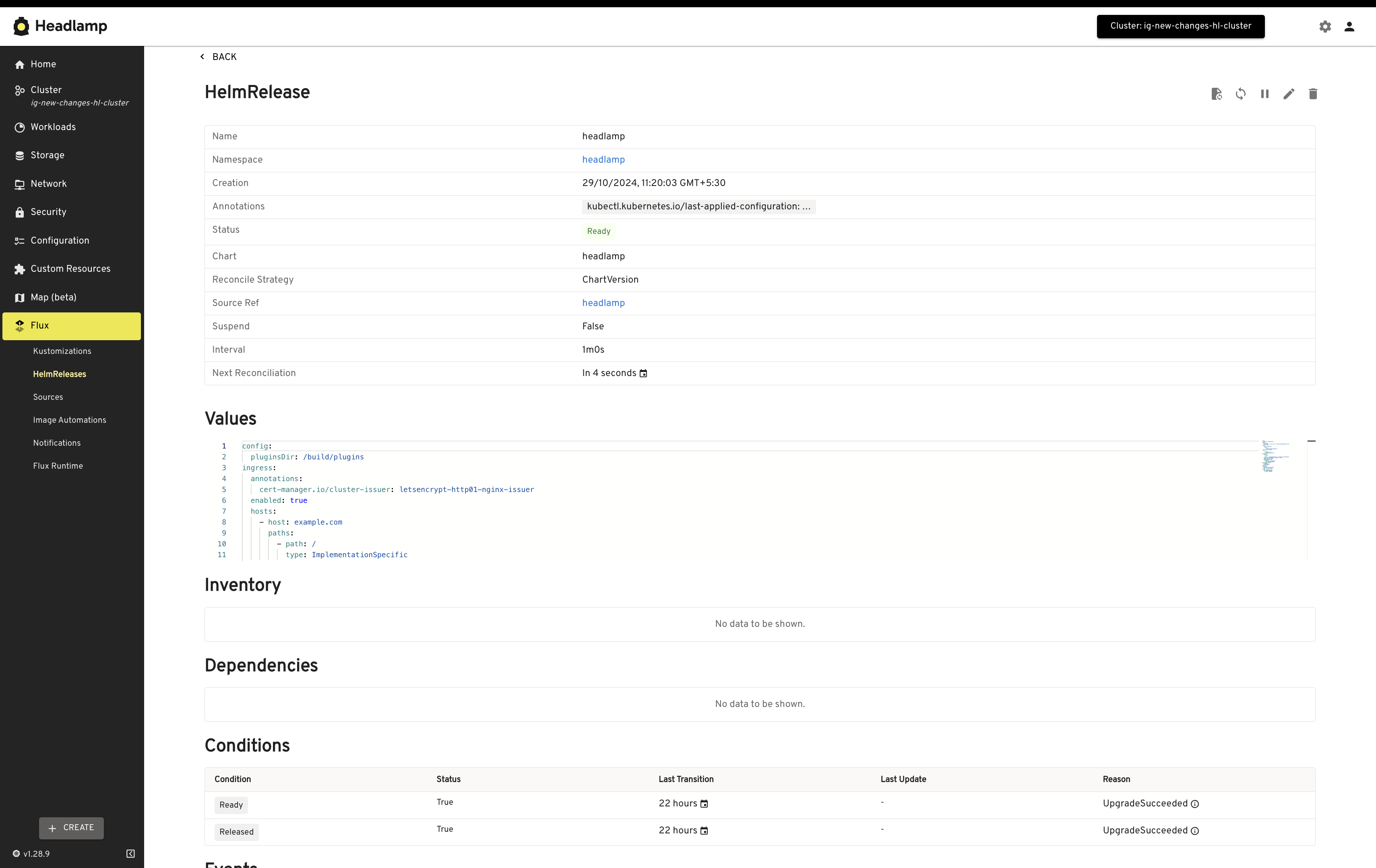

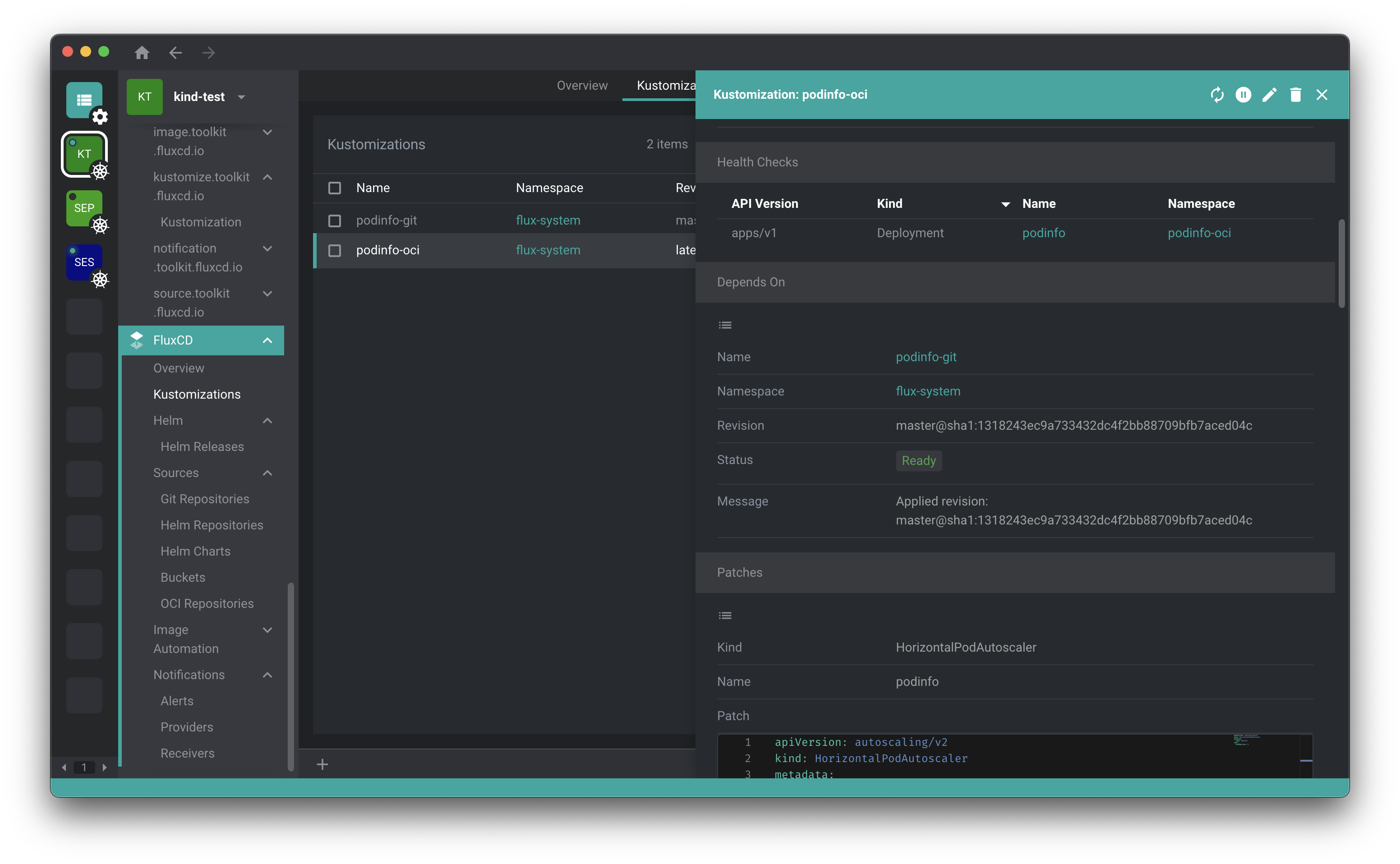

Flux Plugin for Headlamp

Freelens

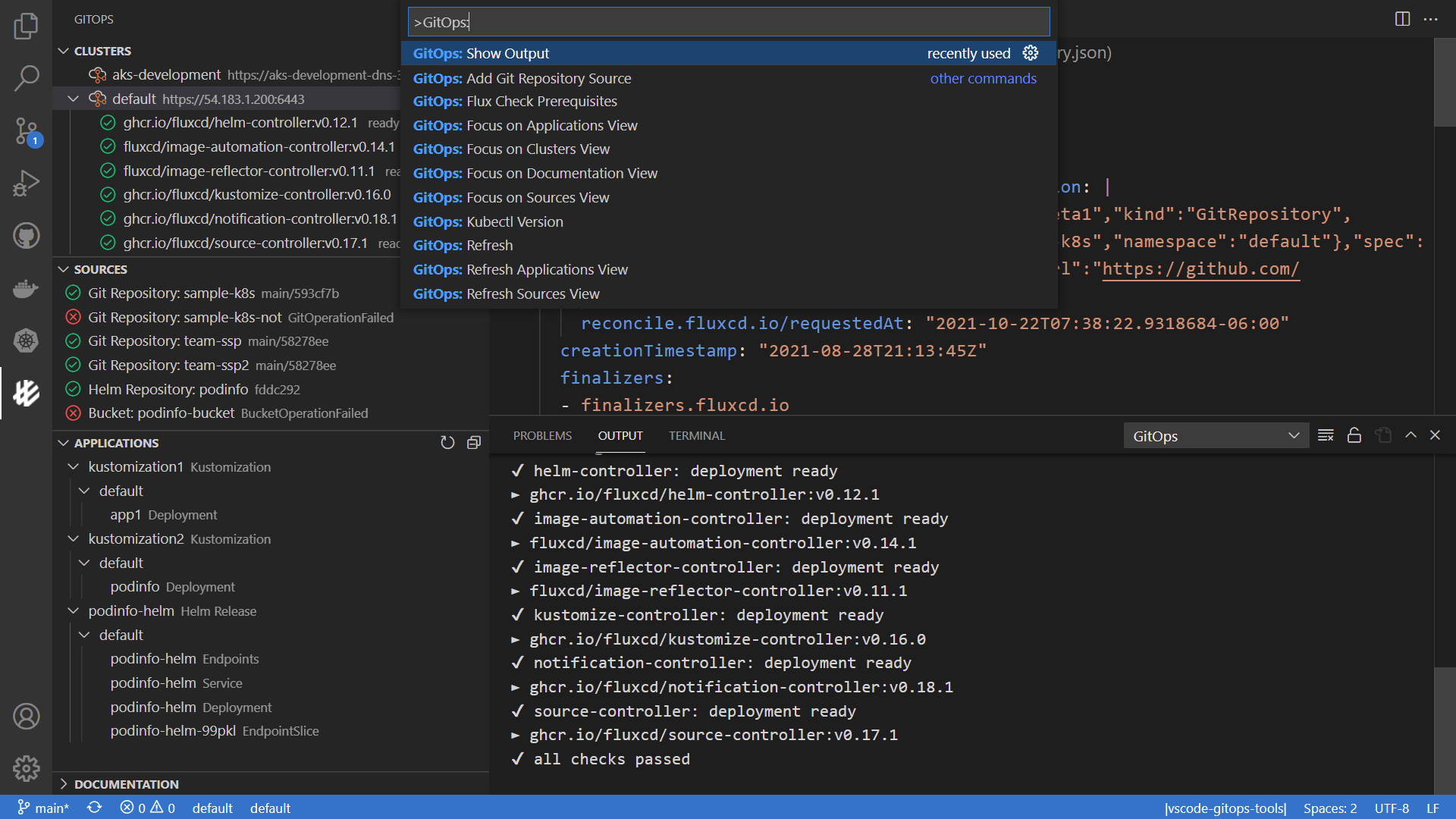

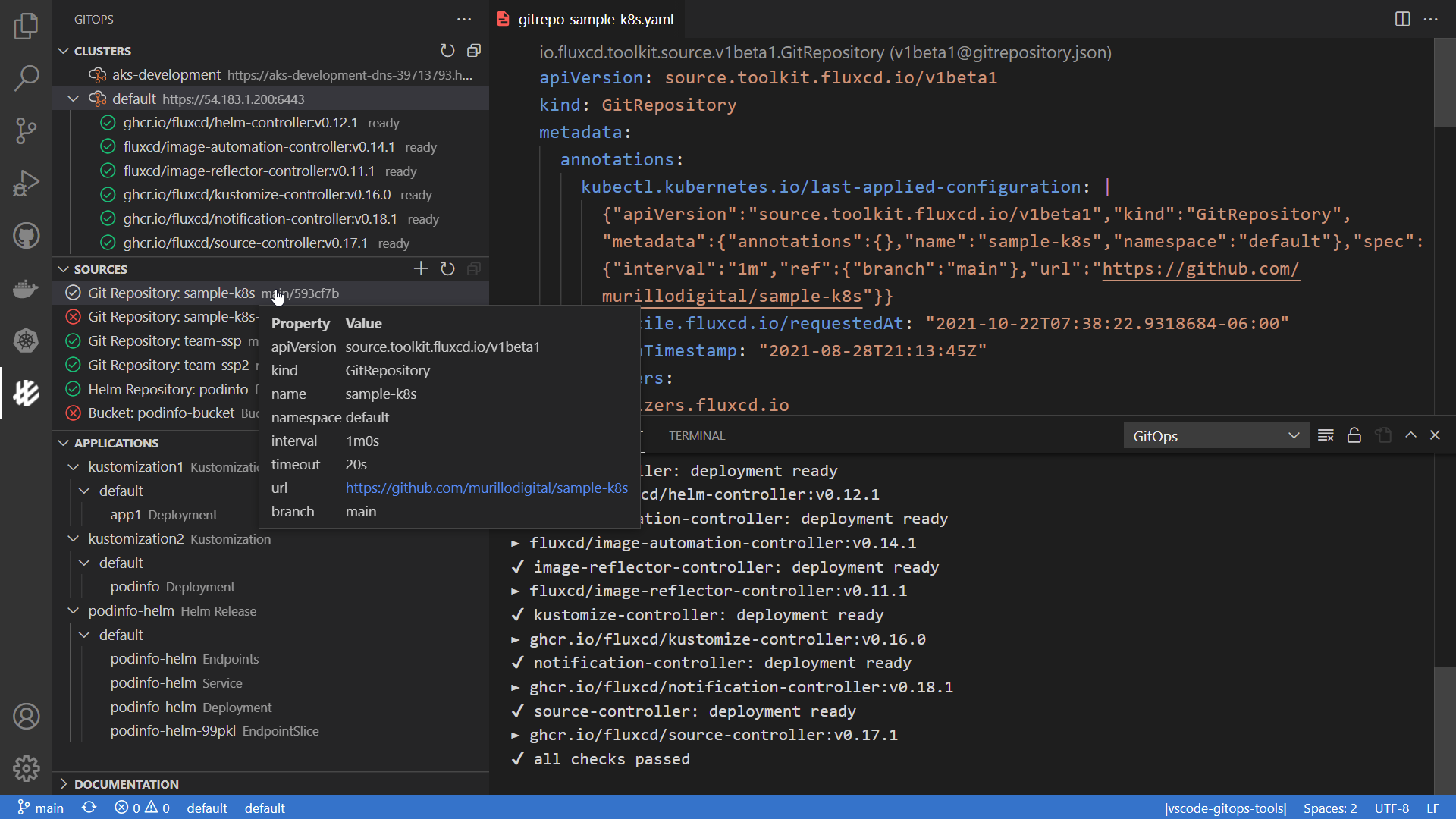

VS Code GitOps Tools

Weave GitOps

Where can I find information about Flux release cadence and supported versions?

Flux is released at least as often as Kubernetes, following its cadence of three minor releases per year.

For the Flux CLI and its controllers, we support the last three minor releases. Critical bug fixes, such as security fixes, may be back-ported to those three minor versions as patch releases, depending on severity and feasibility.

For more details please see the Flux release documentation.

Kustomize questions

Are there two Kustomization types?

Yes, the kustomization.kustomize.toolkit.fluxcd.io is a Kubernetes custom resource, while kustomization.kustomize.config.k8s.io is the type used to configure a Kustomize overlay.

The kustomization.kustomize.toolkit.fluxcd.io object refers to a kustomization.yaml file path inside a Git repository or Bucket source.
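Because both types share the kind Kustomization, the API group in apiVersion is what tells them apart. The following is a minimal sketch of that check, assuming bash and a manifest whose first top-level apiVersion line belongs to the object of interest. The helper name kustomization_type is hypothetical and not part of Flux or Kustomize.

```shell
#!/usr/bin/env bash
# kustomization_type is a hypothetical helper (not part of Flux or Kustomize).
# It prints which Kustomization type a manifest declares, based on the API
# group found in its first top-level apiVersion line.
kustomization_type() {
  local file="$1" api
  # Print the value of the first top-level apiVersion line, then stop reading.
  api="$(sed -n -E '/^apiVersion:/{s/^apiVersion:[[:space:]]*//p;q;}' "$file")"
  case "$api" in
    kustomize.toolkit.fluxcd.io/*) echo "flux-kustomization" ;;
    kustomize.config.k8s.io/*)     echo "kustomize-overlay" ;;
    *)                             echo "unknown" ;;
  esac
}
```

A Kustomize overlay may omit apiVersion entirely, in which case this sketch reports unknown rather than guessing.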

How do I use them together?

Assuming an app repository with ./deploy/prod/kustomization.yaml:

apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
namespace: default
resources:
  - deployment.yaml
  - service.yaml
  - ingress.yaml

Define a source of type gitrepository.source.toolkit.fluxcd.io that pulls changes from the app repository every 5 minutes inside the cluster:

apiVersion: source.toolkit.fluxcd.io/v1
kind: GitRepository
metadata:
  name: my-app
  namespace: default
spec:
  interval: 5m
  url: https://github.com/my-org/my-app
  ref:
    branch: main

Then define a kustomization.kustomize.toolkit.fluxcd.io that uses the kustomization.yaml from ./deploy/prod to determine which resources to create, update or delete:

apiVersion: kustomize.toolkit.fluxcd.io/v1
kind: Kustomization
metadata:
  name: my-app
  namespace: default
spec:
  interval: 15m
  path: "./deploy/prod"
  prune: true
  sourceRef:
    kind: GitRepository
    name: my-app

What is a Kustomization reconciliation?

In the above example, we pull changes from Git every 5 minutes, and a new commit will trigger a reconciliation of all the Kustomization objects using that source.

Depending on your configuration, a reconciliation can mean:

- generating a kustomization.yaml file in the specified path

- building the kustomize overlay

- decrypting secrets

- validating the manifests with client or server-side dry-run

- applying changes on the cluster

- health checking of deployed workloads

- garbage collection of resources removed from Git

- issuing events about the reconciliation result

- recording metrics about the reconciliation process

The 15-minute reconciliation interval is the interval at which manual changes (e.g. kubectl set image deployment/my-app) are undone by reapplying the latest commit on the cluster.

Note that a reconciliation will override all fields of a Kubernetes object that diverge from Git. For example, you’ll have to omit the spec.replicas field from your Deployment YAMLs if you are using a HorizontalPodAutoscaler that changes the replicas in-cluster.

Can I use repositories with plain YAMLs?

Yes, you can specify the path where the Kubernetes manifests are, and kustomize-controller will generate a kustomization.yaml if one doesn’t exist.

Assuming an app repository with the following structure:

├── deploy
│   └── prod
│       ├── .yamllint.yaml
│       ├── deployment.yaml
│       ├── service.yaml
│       └── ingress.yaml
└── src

Create a GitRepository definition and exclude all the files that are not Kubernetes manifests:

apiVersion: source.toolkit.fluxcd.io/v1
kind: GitRepository
metadata:
  name: my-app
  namespace: default
spec:
  interval: 5m
  url: https://github.com/my-org/my-app
  ref:
    branch: main
  ignore: |
    # exclude all
    /*
    # include deploy dir
    !/deploy
    # exclude non-Kubernetes YAMLs
    /deploy/**/.yamllint.yaml

Then create a Kustomization definition to reconcile the ./deploy/prod dir:

apiVersion: kustomize.toolkit.fluxcd.io/v1
kind: Kustomization
metadata:
  name: my-app
  namespace: default
spec:
  interval: 15m
  path: "./deploy/prod"
  prune: true
  sourceRef:
    kind: GitRepository
    name: my-app

With the above configuration, source-controller will pull the Kubernetes manifests from the app repository and kustomize-controller will generate a kustomization.yaml including all the resources found with ./deploy/prod/**/*.yaml.

The kustomize-controller creates kustomization.yaml files similar to:

cd ./deploy/prod && kustomize create --autodetect --recursive

How can I safely move resources from one dir to another?

To move manifests from a directory synced by a Flux Kustomization to another directory synced by a different Kustomization, first disable garbage collection, then move the files.

Assuming you have two Flux Kustomizations named app1 and app2, and you want to move a deployment manifest named deploy.yaml from app1 to app2:

- Disable garbage collection by setting prune: false in the app1 Flux Kustomization. Commit, push and reconcile the changes e.g. flux reconcile ks flux-system --with-source.
- Verify that pruning is disabled in-cluster with flux export ks app1.
- Move the deploy.yaml manifest to the app2 dir, then commit, push and reconcile e.g. flux reconcile ks app2 --with-source.
- Verify that the deployment is now managed by the app2 Kustomization with flux tree ks app2.
- Reconcile the app1 Kustomization and verify that the deployment is no longer managed by it: flux reconcile ks app1 && flux tree ks app1.
- Finally, enable garbage collection by setting prune: true in the app1 Kustomization, then commit and push the changes upstream.
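The verification step can be scripted so that the move only proceeds once pruning is confirmed off. This is a minimal sketch, assuming bash, a flux CLI pointed at the target cluster, and that the exported spec contains a literal prune: false line. The helper name prune_disabled is hypothetical.

```shell
#!/usr/bin/env bash
# prune_disabled is a hypothetical helper: it succeeds only when the exported
# spec of the named Flux Kustomization contains a "prune: false" line.
# Assumes the flux CLI is installed and configured for the target cluster.
prune_disabled() {
  local name="$1" namespace="${2:-flux-system}" spec
  # Capture the export first so a failing flux call is reported as failure.
  spec="$(flux export kustomization "$name" --namespace "$namespace")" || return 1
  grep -Eq '^[[:space:]]*prune:[[:space:]]*false[[:space:]]*$' <<<"$spec"
}
```

For example, prune_disabled app1 && echo "safe to move deploy.yaml" prints the message only when the in-cluster object has pruning disabled.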

Another option is to disable the garbage collection of the objects using an annotation:

- Disable garbage collection in the deploy.yaml by adding the kustomize.toolkit.fluxcd.io/prune: disabled annotation.
- Commit, push and reconcile the changes e.g. flux reconcile ks flux-system --with-source.
- Verify that the annotation has been applied: kubectl get deploy/app1 -o yaml.
- Move the deploy.yaml manifest to the app2 dir, then commit, push and reconcile e.g. flux reconcile ks app2 --with-source.
- Reconcile the app1 Kustomization and verify that the deployment is no longer managed by it: flux reconcile ks app1 && flux tree ks app1.
- Finally, enable garbage collection by setting kustomize.toolkit.fluxcd.io/prune: enabled, then commit and push the changes upstream.

How can I safely rename a Flux Kustomization?

If a Flux Kustomization has spec.prune set to true and you rename the object, then all reconciled workloads will be deleted and recreated.

To safely rename a Flux Kustomization, first set spec.prune to false and sync the change on the cluster. To make sure that the change has been acknowledged by Flux, run flux export kustomization <name> and check that pruning is disabled. Finally, rename the Kustomization and re-enable pruning. Flux will delete the old Kustomization and transfer ownership of the reconciled resources to the new Kustomization. You can run flux tree kustomization <new-name> to see which resources are managed by Flux.

Why are kubectl edits rolled back by Flux?

If you use kubectl to edit an object managed by Flux, all changes will be undone when kustomize-controller reconciles a Flux Kustomization containing that object.

In order for Flux to preserve fields added with kubectl, for example a label or annotation,

you have to specify a field manager named flux-client-side-apply.

For example, to manually add a label to a resource, do:

kubectl --field-manager=flux-client-side-apply label ...

Note that fields specified in Git will always be overridden; the above procedure works only for adding new fields that don’t overlap with the desired state.

Rollout restarts add a “restartedAt” annotation, which Flux will remove, causing the pods to be redeployed.

To complete a rollout restart successfully, use the flux-client-side-apply field manager e.g.:

kubectl --field-manager=flux-client-side-apply rollout restart ...
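To avoid retyping the flag, the field manager can be wrapped in a small shell function. This is a sketch assuming bash and kubectl on the PATH. The function name kubectl_keep is hypothetical.

```shell
#!/usr/bin/env bash
# kubectl_keep is a hypothetical wrapper name. It forwards every argument to
# kubectl and sets the flux-client-side-apply field manager described above.
kubectl_keep() {
  kubectl --field-manager=flux-client-side-apply "$@"
}
```

With the function loaded, kubectl_keep rollout restart deployment/my-app runs the same command as the example above, where deployment/my-app is a placeholder for your own workload.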

Should I be using Kustomize remote bases?

For security and performance reasons, it is advised to disallow the usage of remote bases in Kustomize overlays. To enforce this setting, platform admins can set the --no-remote-bases=true flag for kustomize-controller.

When using remote bases, the manifests are fetched over HTTPS from their remote source on every reconciliation e.g.:

# apps/podinfo/kustomization.yaml

apiVersion: kustomize.config.k8s.io/v1beta1

kind: Kustomization

resources:

- https://github.com/stefanprodan/podinfo/kustomize?ref=master

To take advantage of Flux’s verification and caching features,

you can replace the kustomization.yaml with a Flux source definition:

apiVersion: source.toolkit.fluxcd.io/v1
kind: OCIRepository
metadata:
  name: podinfo
  namespace: apps
spec:
  interval: 60m
  url: oci://ghcr.io/stefanprodan/manifests/podinfo
  ref: # pull the latest stable version every hour
    semver: ">=1.0.0"

Then, to reconcile the manifests on a cluster, you’ll use the ones from the Flux source:

apiVersion: kustomize.toolkit.fluxcd.io/v1
kind: Kustomization
metadata:
  name: podinfo
  namespace: apps
spec:
  interval: 60m
  retryInterval: 5m
  prune: true
  wait: true
  timeout: 3m
  sourceRef:
    kind: OCIRepository
    name: podinfo
  path: ./kustomize

Should I be using Kustomize Helm chart plugin?

For security and performance reasons, Flux does not allow the execution of Kustomize plugins which shell out to arbitrary binaries inside the kustomize-controller container.

Instead of using Kustomize to deploy charts, e.g.:

# infra/metrics-server/kustomization.yaml
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
namespace: monitoring
resources:
  - monitoring-namespace.yaml
helmCharts:
  - name: metrics-server
    valuesInline:
      args:
        - --kubelet-insecure-tls
    releaseName: metrics-server
    version: 3.12.0
    repo: https://kubernetes-sigs.github.io/metrics-server/

You can take advantage of Flux’s OCI and native Helm features,

by replacing the kustomization.yaml with a Flux Helm definition:

apiVersion: source.toolkit.fluxcd.io/v1
kind: OCIRepository
metadata:
  name: metrics-server
  namespace: monitoring
spec:
  interval: 1h
  layerSelector:
    mediaType: "application/vnd.cncf.helm.chart.content.v1.tar+gzip"
    operation: copy
  url: oci://ghcr.io/controlplaneio-fluxcd/charts/metrics-server
  ref:
    semver: "3.x" # auto upgrade to the latest minor version
---
apiVersion: helm.toolkit.fluxcd.io/v2
kind: HelmRelease
metadata:
  name: metrics-server
  namespace: monitoring
spec:
  interval: 1h
  chartRef:
    kind: OCIRepository
    name: metrics-server
  values:
    args:
      - --kubelet-insecure-tls

What is the behavior of Kustomize used by Flux?

The Kustomize v5 CLI flags are listed here so that you can replicate the same behavior using kustomize build:

- --enable-alpha-plugins is disabled by default, so it uses only the built-in plugins.
- --load-restrictor is set to LoadRestrictionsNone, so it allows loading files outside the dir containing kustomization.yaml.

To replicate the build and apply dry run locally:

kustomize build --load-restrictor=LoadRestrictionsNone . \
  | kubectl apply --server-side --dry-run=server -f-
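To run the same build over every overlay in a repository, the command can be wrapped in a loop. This is a minimal sketch, assuming bash, kustomize on the PATH, and that every directory containing a file named kustomization.yaml is meant to be built. The helper name build_overlays is hypothetical, and the sketch skips the kubectl dry-run step.

```shell
#!/usr/bin/env bash
# build_overlays is a hypothetical helper: it runs kustomize build, with the
# load restrictor flag described above, on every directory under the given
# root that contains a kustomization.yaml. It stops at the first failure.
build_overlays() {
  local root="${1:-.}" file dir
  while IFS= read -r -d '' file; do
    dir="$(dirname "$file")"
    echo "building ${dir}" >&2
    kustomize build --load-restrictor=LoadRestrictionsNone "$dir" >/dev/null || return 1
  done < <(find "$root" -type f -name kustomization.yaml -print0)
}
```

Calling build_overlays ./clusters, where ./clusters is a placeholder path, returns a non-zero status as soon as one overlay fails to build.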

kustomization.yaml validation

To validate changes before committing and/or merging, a validation utility script is available. It runs kustomize locally or in CI with the same set of flags as the controller and validates the output using kubeconform.

How to patch CoreDNS and other pre-installed addons?

To patch a pre-installed addon like CoreDNS with customized content, add a shell manifest with only the changed values and the kustomize.toolkit.fluxcd.io/ssa: merge annotation to your Git repository.

Example: CoreDNS with custom replicas. The empty spec.template.spec.containers list is needed for the patch to work and will not override the existing containers:

---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    k8s-app: kube-dns
  annotations:
    kustomize.toolkit.fluxcd.io/prune: disabled
    kustomize.toolkit.fluxcd.io/ssa: merge
  name: coredns
  namespace: kube-system
spec:
  replicas: 5
  selector:
    matchLabels:
      eks.amazonaws.com/component: coredns
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        eks.amazonaws.com/component: coredns
        k8s-app: kube-dns
    spec:
      containers: []

Note that only non-managed fields should be modified, otherwise there will be a conflict with the manager of the fields (e.g. eks). For example, while you will be able to modify affinity/antiaffinity fields, the manager (e.g. eks) will revert those changes, and that might not be immediately visible to you (with EKS that would be an interval of once every 30 minutes). The deployment will go into a rolling upgrade and Flux will revert it back to the patched version.

Helm questions

Can I use Flux HelmReleases without GitOps?

Yes, you can install the Flux components directly on a cluster

and manage Helm releases with kubectl.

Install the controllers needed for Helm operations with flux:

flux install \
  --namespace=flux-system \
  --network-policy=false \
  --components=source-controller,helm-controller

Create a Helm release with kubectl:

cat << EOF | kubectl apply -f -
---
apiVersion: source.toolkit.fluxcd.io/v1
kind: OCIRepository
metadata:
  name: kube-prometheus-stack
  namespace: monitoring
spec:
  interval: 1h
  layerSelector:
    mediaType: "application/vnd.cncf.helm.chart.content.v1.tar+gzip"
    operation: copy
  url: oci://ghcr.io/prometheus-community/charts/kube-prometheus-stack
  ref:
    semver: "72.x"
---
apiVersion: helm.toolkit.fluxcd.io/v2
kind: HelmRelease
metadata:
  name: kube-prometheus-stack
  namespace: monitoring
spec:
  interval: 1h
  timeout: 10m
  chartRef:
    kind: OCIRepository
    name: kube-prometheus-stack
  install:
    crds: Create
  upgrade:
    crds: CreateReplace
  values:
    alertmanager:
      enabled: false
EOF

Based on the above definition, Flux will upgrade the release automatically when a new minor or patch version is available for the kube-prometheus-stack chart.
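As an illustration of which versions a range such as "72.x" admits, here is a minimal bash sketch. It assumes plain MAJOR.MINOR.PATCH version strings and treats pre-release versions as out of range. The real matching is done by Flux itself, and the helper name matches_major_x is hypothetical.

```shell
#!/usr/bin/env bash
# matches_major_x is a hypothetical helper illustrating what a range such as
# "72.x" accepts: any stable MAJOR.MINOR.PATCH version whose major part
# equals the number before the first dot of the range.
matches_major_x() {
  local range="$1" version="$2"
  local major="${range%%.*}"   # "72.x" -> "72"
  [[ "$version" =~ ^${major}\.[0-9]+\.[0-9]+$ ]]
}
```

Under these assumptions, matches_major_x "72.x" "72.9.3" succeeds, while the same call with "73.0.0" fails, which mirrors minor and patch upgrades being picked up automatically while a new major version is not.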

How do I set local overrides to a Helm chart?

Let's assume we have a common HelmRelease definition that we use as a base and need to customize further, e.g. per cluster, tenant, environment and so on:

apiVersion: helm.toolkit.fluxcd.io/v2
kind: HelmRelease
metadata:
  name: podinfo
  namespace: podinfo
spec:
  releaseName: podinfo
  chart:
    spec:
      chart: podinfo
      sourceRef:
        kind: HelmRepository
        name: podinfo
  interval: 50m
  install:
    remediation:
      retries: 3

Suppose we want to override the chart version per cluster, for example to gradually roll out a new version. There are a couple of options:

Using Kustomize patches

---
apiVersion: kustomize.toolkit.fluxcd.io/v1
kind: Kustomization
metadata:
  name: apps
  namespace: flux-system
spec:
  interval: 30m
  retryInterval: 2m
  sourceRef:
    kind: GitRepository
    name: flux-system
  path: ./apps/production
  prune: true
  wait: true
  timeout: 5m0s
  patches:
    - patch: |-
        - op: replace
          path: /spec/chart/spec/version
          value: 4.0.1
      target:
        kind: HelmRelease
        name: podinfo
        namespace: podinfo

Using Kustomize variable substitution

apiVersion: helm.toolkit.fluxcd.io/v2
kind: HelmRelease
metadata:
  name: podinfo
  namespace: podinfo
spec:
  releaseName: podinfo
  chart:
    spec:
      chart: podinfo
      version: ${PODINFO_CHART_VERSION:=6.2.0}
      sourceRef:
        kind: HelmRepository
        name: podinfo
  interval: 50m
  install:
    remediation:
      retries: 3

To enable the replacement of the PODINFO_CHART_VERSION variable with a different

version than the 6.2.0 default, specify postBuild in the Kustomization:

apiVersion: kustomize.toolkit.fluxcd.io/v1
kind: Kustomization
metadata:
  name: apps
  namespace: flux-system
spec:
  interval: 30m
  retryInterval: 2m
  sourceRef:
    kind: GitRepository
    name: flux-system
  path: ./apps/production
  prune: true
  wait: true
  timeout: 5m0s
  postBuild:
    substitute:
      PODINFO_CHART_VERSION: 6.3.0
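The ${PODINFO_CHART_VERSION:=6.2.0} expression uses the same default-value syntax as bash, so its outcome can be previewed in a shell. This is only an analogy: Flux performs the substitution inside kustomize-controller and its behavior may differ from bash in edge cases. The helper name render_version is hypothetical.

```shell
#!/usr/bin/env bash
# render_version is a hypothetical helper that mimics, in plain bash, how the
# ${PODINFO_CHART_VERSION:=6.2.0} expression resolves. The function body runs
# in a subshell so the caller's environment is left untouched.
render_version() (
  if [ "$#" -gt 0 ]; then
    PODINFO_CHART_VERSION="$1"    # value that postBuild.substitute would supply
  else
    unset PODINFO_CHART_VERSION   # no substitution configured
  fi
  echo "version: ${PODINFO_CHART_VERSION:=6.2.0}"
)
```

Running render_version prints version: 6.2.0, and render_version 6.3.0 prints version: 6.3.0, matching the default and the overridden value in the manifests above.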

Flux v1 vs v2 questions

What are the differences between v1 and v2?

Flux v1 is a monolithic do-it-all operator; it has reached its EOL and has been archived. Flux v2 separates the functionalities into specialized controllers, collectively called the GitOps Toolkit.

You can find a detailed comparison of Flux v1 and v2 features in the migration FAQ.

How can I migrate from v1 to v2?

The Flux community has created guides and example repositories to help you migrate to Flux v2:

- Migrate from Flux v1
- Migrate from .flux.yaml and kustomize
- Migrate from Flux v1 automated container image updates
- How to manage multi-tenant clusters with Flux v2
- Migrate from Helm Operator to Flux v2
- How to structure your HelmReleases